Types of Vision Sensors:

- Vision Sensors:

Vision sensors, including cameras and depth sensors, provide robots with the ability to see and interpret visual information from their surroundings. Cameras capture images and videos, allowing robots to identify objects, recognize patterns, and navigate through environments. Depth sensors, such as LiDAR (Light Detection and Ranging) and time-of-flight cameras, measure distances to objects in the environment, enabling robots to perceive depth and generate 3D maps for navigation and obstacle avoidance.

Types of Vision Sensors:

- Camera Sensors:

Camera sensors capture images and videos of the robot’s surroundings, providing visual data for analysis and interpretation. These sensors utilize various imaging technologies, including charge-coupled devices (CCDs) and complementary metal-oxide-semiconductor (CMOS) sensors, to capture light and convert it into digital signals. Cameras come in different configurations, such as monochrome, color, and infrared, offering flexibility for different applications and environments.

Camera Sensors in Robotics: Capturing the Vision of Tomorrow

In robotics, where perception of and interaction with the environment are central, camera sensors serve as the eyes of robots. Equipped with imaging technology, they let robots perceive visual information, navigate through complex environments, and interact with objects. The sections below cover the main types of camera sensors, their functionalities, and their role in robotics.

Types of Camera Sensors:

- Charge-Coupled Device (CCD) Sensors:

CCD sensors are traditional image sensors that convert light into electrical signals. They consist of an array of pixels, each capable of capturing light and generating a corresponding electrical charge. CCD sensors are known for their high-quality image output, low noise levels, and excellent dynamic range, making them suitable for applications requiring high-resolution imaging and low-light performance.

- Complementary Metal-Oxide-Semiconductor (CMOS) Sensors:

CMOS sensors are the most common type of image sensor used in modern cameras and smartphones. They employ a different architecture than CCD sensors: each pixel contains its own amplifier, and analog-to-digital conversion happens on the same chip, commonly with one converter per column rather than one per pixel. CMOS sensors offer advantages such as lower power consumption, faster readout speeds, and higher integration levels, making them well suited to applications requiring compact size, high frame rates, and low-cost imaging solutions.

- Global Shutter Sensors:

Global shutter sensors capture images by exposing all pixels simultaneously and then reading out the stored frame. This enables them to capture fast-moving objects without the skew and wobble distortion that row-by-row exposure introduces, although motion blur can still occur if the exposure time is long relative to the motion. Global shutter sensors are commonly used in robotics for applications such as motion tracking, object recognition, and high-speed imaging.

- Rolling Shutter Sensors:

Rolling shutter sensors capture images by scanning the image row by row, resulting in a slight time delay between the exposure of different rows. While rolling shutter sensors are more susceptible to motion artifacts and distortion, they are often more cost-effective and offer higher resolution options than global shutter sensors.
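The effect of the row-by-row delay can be estimated with simple arithmetic. The following is a minimal sketch, assuming a constant per-row readout time and a vertical edge that spans the full frame while moving horizontally at constant speed; the function name and the example numbers are hypothetical and not taken from any particular sensor.

```python
def rolling_shutter_skew_px(image_rows: int, line_time_s: float, speed_px_per_s: float) -> float:
    """Horizontal offset (in pixels) between the first and last row of a moving edge."""
    # The last row is exposed (image_rows - 1) line times after the first row.
    readout_span_s = (image_rows - 1) * line_time_s
    return speed_px_per_s * readout_span_s


# Hypothetical example: 1081 rows, 10 microseconds per row, edge moving at 2000 px/s.
skew = rolling_shutter_skew_px(image_rows=1081, line_time_s=10e-6, speed_px_per_s=2000.0)
print(f"Skew between top and bottom rows: {skew:.1f} px")
```

A global shutter sensor would show no such offset for the same scene, because all rows are exposed at the same instant.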

- Time-of-Flight (ToF) Sensors:

ToF sensors measure the time it takes for light to travel from the sensor to objects in the scene and back, enabling them to capture per-pixel depth information. Color images come from a separate RGB camera when one is paired with the ToF sensor. ToF sensors are used in robotics for applications such as 3D mapping, obstacle detection, and gesture recognition, where accurate depth perception is critical.

Functionalities of Camera Sensors:

- Image Capture and Processing:

Camera sensors enable robots to capture high-resolution images of the environment, providing valuable visual information for perception and decision-making. By analyzing images captured by cameras, robots can identify objects, detect features, and extract meaningful information to navigate through complex environments and interact with objects with precision and accuracy.

- Object Detection and Recognition:

Camera sensors facilitate object detection and recognition for robots by analyzing visual features such as color, shape, and texture. By employing techniques such as machine learning and computer vision algorithms, robots can identify objects within the scene, classify them into categories, and localize them with respect to their position and orientation.
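The simplest form of color-based detection and localization can be sketched without any machine learning. The example below is a minimal illustration, assuming the image is a nested list of (r, g, b) tuples; the function name `find_color_object` and the threshold values are hypothetical choices, not part of any library.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]
Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max)


def find_color_object(image: List[List[Pixel]], min_red: int = 200, max_other: int = 80) -> Optional[Box]:
    """Return the bounding box of strongly red pixels, or None if there are none."""
    hits = [
        (x, y)
        for y, row in enumerate(image)
        for x, (r, g, b) in enumerate(row)
        if r >= min_red and g <= max_other and b <= max_other
    ]
    if not hits:
        return None
    xs = [x for x, _ in hits]
    ys = [y for _, y in hits]
    return (min(xs), min(ys), max(xs), max(ys))


# Tiny test image: black background with a 2x2 red patch.
BLACK, RED = (0, 0, 0), (255, 0, 0)
image = [
    [BLACK, BLACK, BLACK, BLACK],
    [BLACK, RED, RED, BLACK],
    [BLACK, RED, RED, BLACK],
]
box = find_color_object(image)
print(box)
```

Real systems usually replace the fixed threshold with a learned detector, but the output has the same shape: a category plus a location in the image.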

- Navigation and Localization:

Camera sensors enable robots to navigate through environments and localize themselves with respect to landmarks or reference points. By capturing images of the surroundings and comparing them to pre-existing maps or reference images, robots can determine their position, plan optimal paths, and navigate to desired locations with precision and accuracy.

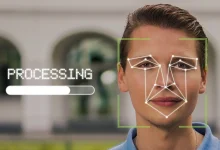

- Gesture and Facial Recognition:

Camera sensors enable robots to interact with humans in a natural and intuitive manner by recognizing gestures, facial expressions, and body movements. By analyzing images of human faces and gestures, robots can estimate the likely intentions and emotional states of humans and respond accordingly, enhancing the usability and effectiveness of human-robot interaction.

- Scene Understanding and Interpretation:

Camera sensors enable robots to understand and interpret the visual scene, extracting meaningful information from images to make intelligent decisions. By analyzing visual cues such as edges, corners, and textures, robots can infer the spatial layout of the environment, identify objects of interest, and perform tasks such as scene reconstruction, semantic segmentation, and visual odometry.

Significance of Camera Sensors in Robotics:

- Enhanced Perception and Situational Awareness:

Camera sensors provide robots with enhanced perception and situational awareness of their surroundings, enabling them to navigate through complex environments and interact with objects with precision and accuracy. By capturing high-resolution images and analyzing visual features, sensors enable robots to understand the spatial layout of the environment, identify obstacles, and make informed decisions based on visual cues.

- Autonomous Operation and Intelligent Behavior:

Camera sensors enable autonomous operation and intelligent behavior for robots, allowing them to perceive and interpret the environment without human intervention. By employing machine learning and computer vision algorithms, robots can analyze images in real time, localize themselves, plan paths, and interact with objects autonomously.

- Flexible and Adaptive Interaction:

Camera sensors enable robots to exhibit flexible and adaptive interaction with the environment and humans, responding to changes in the environment or user inputs. By detecting visual cues such as gestures, facial expressions, or object movements, robots can adapt their behavior and interaction strategies accordingly, enhancing the usability and effectiveness of human-robot interaction.

- Safety and Reliability:

Camera sensors enhance safety and reliability in robotics applications by enabling robots to detect and respond to hazards or changes in the environment. By continuously monitoring the surroundings for obstacles, humans, or other objects, camera sensors help robots operate safely and avoid collisions or accidents.

- Advanced Robotics Applications:

Camera sensors enable robots to perform advanced tasks and applications that require perception, recognition, and understanding of the visual scene. From industrial automation and inspection to autonomous vehicles and drones, camera sensors are integral components of robotics systems across various domains. By providing robots with the ability to perceive and interpret visual information, camera sensors enable them to perform complex tasks with precision and efficiency.

In conclusion, camera sensors are core components in robotics, providing the perception, navigation, and interaction capabilities that autonomous operation and intelligent behavior depend on. From object detection and recognition to navigation and human-robot interaction, camera sensors let robots perceive and interpret the world around them. As robotics technology continues to advance, the role of camera sensors in robotics applications is likely to keep growing.

- Depth Sensors:

Depth sensors measure the distance to objects in the environment, enabling robots to perceive depth and generate three-dimensional (3D) representations of the scene. Common types of depth sensors include time-of-flight (ToF) cameras, structured light sensors, and stereo vision systems. Depth sensors provide valuable information for obstacle detection, navigation, and object recognition in robotics applications.

Depth Sensors in Robotics: Unveiling the Third Dimension of Perception

In robotics, where interaction with the environment demands precision and understanding, depth sensors give robots the ability to perceive depth and spatial information. These sensors enable robots to navigate through complex environments, detect obstacles, and interact with objects accurately. The sections below cover the main types of depth sensors, their functionalities, and their role in robotics.

Types of Depth Sensors:

- Time-of-Flight (ToF) Sensors:

Time-of-Flight sensors measure the time it takes for emitted light, typically infrared, to travel from the sensor to objects in the scene and back. Because the light covers the distance twice, the distance to an object is half the round-trip time multiplied by the speed of light. These sensors offer fast depth sensing capabilities, making them suitable for applications such as 3D mapping, gesture recognition, and object tracking.
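The round-trip timing relationship can be sketched in a few lines. This is a minimal illustration, assuming a direct (pulsed) time-of-flight measurement in air or vacuum; the function name is hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_time_s: float) -> float:
    """Distance to the target from a measured round-trip time."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels to the object and back, so halve the total path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


# A 20 nanosecond round trip corresponds to roughly 3 metres.
distance = tof_distance_m(20e-9)
print(f"{distance:.3f} m")
```

The example also shows why these sensors need very fine timing: one nanosecond of timing error corresponds to about 15 cm of distance error.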

- Structured Light Sensors:

Structured light sensors project a pattern of light onto the scene and analyze the deformation of the pattern caused by the objects’ surfaces. By measuring the distortion of the pattern, structured light sensors can calculate depth information with high precision. These sensors are widely used in robotics for tasks such as 3D scanning, object recognition, and augmented reality applications.

- Stereo Vision Systems:

Stereo vision systems consist of two or more cameras positioned at different viewpoints to mimic human binocular vision. By measuring the disparity, the shift in an object’s image position between the cameras, stereo vision systems calculate depth by triangulation: nearby objects produce large disparities and distant objects small ones. These systems need no active illumination, which makes them suitable for applications such as navigation, obstacle avoidance, and 3D reconstruction, although accuracy falls off with distance and in poorly textured scenes.
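For a rectified stereo pair, triangulation reduces to a single formula: depth equals focal length times baseline divided by disparity. The sketch below assumes a rectified pinhole camera pair with the focal length expressed in pixels; the function name and the example values are hypothetical.

```python
def stereo_depth_m(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point from its disparity in a rectified stereo pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means the point is at infinity)")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical rig: 700 px focal length, 12 cm baseline.
near = stereo_depth_m(700.0, 0.12, disparity_px=42.0)
far = stereo_depth_m(700.0, 0.12, disparity_px=4.2)
print(f"near: {near:.2f} m, far: {far:.2f} m")
```

Because depth is inversely proportional to disparity, a fixed matching error of a fraction of a pixel causes a much larger depth error for distant points than for nearby ones.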

- Laser Range Finders:

Laser range finders emit laser beams and measure the time it takes for the beams to reflect off objects and return to the sensor. By analyzing the time-of-flight of the laser pulses, laser range finders can calculate accurate distance measurements, thus providing depth information. These sensors are commonly used in robotics for tasks such as localization, mapping, and object detection in outdoor environments.

- Depth Cameras:

Depth cameras integrate depth sensing technology with traditional RGB cameras to capture color images along with depth information. These cameras employ techniques such as structured light or ToF sensing to measure depth accurately, enabling robots to perceive the three-dimensional structure of the environment. Depth cameras are widely used in robotics for applications such as augmented reality, virtual reality, and human-robot interaction.

Functionalities of Depth Sensors:

- Obstacle Detection and Avoidance:

Depth sensors enable robots to detect obstacles in their path and navigate through environments safely. By providing depth information, sensors allow robots to perceive the spatial layout of the environment and plan collision-free paths to avoid obstacles effectively. Obstacle detection and avoidance functionalities are crucial for autonomous robots operating in dynamic environments, ensuring safe and efficient navigation.
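A very simple obstacle check on a depth map can be sketched as follows. This is an illustrative sketch only, assuming the depth map is a nested list of distances in metres where 0.0 marks pixels with no valid reading; the function names and the safety distance are hypothetical.

```python
from typing import List, Optional


def nearest_obstacle_m(depth_map: List[List[float]]) -> Optional[float]:
    """Smallest valid distance in the depth map, or None if no pixel is valid."""
    valid = [d for row in depth_map for d in row if d > 0.0]
    return min(valid) if valid else None


def must_stop(depth_map: List[List[float]], safety_distance_m: float) -> bool:
    """True if any valid reading is closer than the safety distance."""
    nearest = nearest_obstacle_m(depth_map)
    return nearest is not None and nearest < safety_distance_m


depth_map = [
    [0.0, 3.2, 3.1],  # 0.0 marks an invalid pixel
    [2.9, 0.8, 3.0],
]
print(nearest_obstacle_m(depth_map), must_stop(depth_map, safety_distance_m=1.0))
```

A practical planner would restrict the check to the region the robot is about to drive through and filter out isolated noisy pixels, but the core test is the same comparison against a safety distance.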

- Object Recognition and Localization:

Depth sensors facilitate object recognition and localization for robots by providing accurate depth information. By analyzing depth maps generated by sensors, robots can identify objects within the scene, classify them into categories, and localize them with respect to their position and orientation. Object recognition and localization functionalities are essential for tasks such as pick-and-place, sorting, and inspection in robotics applications.

- 3D Mapping and Reconstruction:

Depth sensors enable robots to create detailed 3D maps of the environment by capturing depth information from multiple viewpoints. By combining depth maps with RGB images, robots can reconstruct the three-dimensional structure of the scene, enabling tasks such as 3D mapping, localization, and navigation. 3D mapping and reconstruction functionalities are crucial for autonomous robots operating in unstructured or unknown environments, providing valuable spatial information for decision-making and navigation.
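The step from a depth map to 3D points is usually a back-projection through the pinhole camera model. The sketch below assumes known camera intrinsics (focal lengths `fx`, `fy` and principal point `cx`, `cy`, all in pixels) and a depth map stored as a nested list in metres with 0.0 marking invalid pixels; the function names and intrinsic values are hypothetical.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def backproject_pixel(u: float, v: float, depth_m: float,
                      fx: float, fy: float, cx: float, cy: float) -> Point3D:
    """Convert pixel (u, v) with a depth value into a 3D point in the camera frame."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def depth_map_to_points(depth_map: List[List[float]],
                        fx: float, fy: float, cx: float, cy: float) -> List[Point3D]:
    """Back-project every valid pixel of a depth map into a point cloud."""
    points = []
    for v, row in enumerate(depth_map):
        for u, depth_m in enumerate(row):
            if depth_m > 0.0:  # skip invalid readings
                points.append(backproject_pixel(u, v, depth_m, fx, fy, cx, cy))
    return points


center = backproject_pixel(320, 240, 2.0, fx=500.0, fy=500.0, cx=320.0, cy=240.0)
right = backproject_pixel(420, 240, 2.0, fx=500.0, fy=500.0, cx=320.0, cy=240.0)
print(center, right)
```

Point clouds from several viewpoints can then be merged into one map once each is transformed by the corresponding camera pose.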

- Gesture and Human Pose Recognition:

Depth sensors enable robots to recognize gestures and human poses with high accuracy. By analyzing depth images of human bodies, robots can detect key points such as joints and limbs, enabling gesture recognition and human pose estimation. Gesture and human pose recognition functionalities enhance the usability and effectiveness of human-robot interaction, enabling intuitive and natural communication between humans and robots.

- Augmented Reality and Virtual Reality:

Depth sensors enable robots to interact with virtual objects and augment the physical environment with digital content. By capturing depth information from the scene, sensors enable augmented reality and virtual reality applications, allowing robots to overlay virtual objects onto the real world or create immersive virtual environments for users. Augmented reality and virtual reality functionalities enhance the user experience and enable new forms of interaction and visualization in robotics applications.

Significance of Depth Sensors in Robotics:

- Enhanced Perception and Situational Awareness:

Depth sensors provide robots with enhanced perception and situational awareness of their surroundings, enabling them to navigate through complex environments and interact with objects with precision and accuracy. By capturing accurate depth information, sensors enable robots to understand the spatial layout of the environment, detect obstacles, and make informed decisions based on the scene’s geometry.

- Autonomous Operation and Intelligent Behavior:

Depth sensors enable autonomous operation and intelligent behavior for robots by providing accurate depth information for perception and decision-making. By analyzing depth maps in real time, robots can localize themselves, plan paths, avoid obstacles, and interact with objects autonomously.

- Flexible and Adaptive Interaction:

Depth sensors enable robots to exhibit flexible and adaptive interaction with the environment and humans, responding to changes in the environment or user inputs. By detecting gestures, human poses, and object positions accurately, sensors enable robots to adapt their behavior and interaction strategies accordingly, enhancing the usability and effectiveness of human-robot interaction.

- Safety and Reliability:

Depth sensors enhance safety and reliability in robotics applications by enabling robots to detect and respond to hazards or changes in the environment. By continuously monitoring the surroundings for obstacles, humans, or other objects, depth sensors help robots operate safely and avoid collisions or accidents.

- Advanced Robotics Applications:

Depth sensors enable robots to perform advanced tasks and applications that require perception, recognition, and understanding of the three-dimensional world. From navigation and object manipulation to augmented reality and virtual reality, depth sensors are integral components of robotics systems across various domains. By providing robots with accurate depth information, depth sensors enable them to perform complex tasks with precision and efficiency.

In conclusion, depth sensors are core components in robotics, providing the perception, navigation, and interaction capabilities that autonomous operation and intelligent behavior depend on. From obstacle detection and 3D mapping to human-robot interaction and augmented reality, depth sensors let robots perceive and interpret the three-dimensional world. As robotics technology continues to advance, the role of depth sensors in robotics applications is likely to keep growing.

- LiDAR Sensors:

LiDAR (Light Detection and Ranging) sensors emit laser pulses and measure the time it takes for the pulses to reflect off objects in the environment. By scanning the laser beams in different directions, LiDAR sensors create detailed 3D maps of the surroundings, with precise measurements of distance and spatial information. LiDAR sensors are widely used for mapping, localization, and navigation in autonomous robots and vehicles.
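A single planar LiDAR sweep is typically a list of ranges taken at evenly spaced angles, which can be converted to Cartesian points for mapping. The sketch below assumes a 2D scanner with a known start angle and angular step; the function and parameter names are hypothetical and do not refer to any specific driver API.

```python
import math
from typing import List, Tuple


def scan_to_points(ranges_m: List[float], angle_min_rad: float,
                   angle_increment_rad: float, max_range_m: float) -> List[Tuple[float, float]]:
    """Convert a planar range scan into (x, y) points in the sensor frame."""
    points = []
    for i, r in enumerate(ranges_m):
        # Skip missing returns (zero, infinite, NaN) and out-of-range readings.
        if not (0.0 < r <= max_range_m):
            continue
        theta = angle_min_rad + i * angle_increment_rad
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


# Three beams at 0, 90, and 180 degrees; the middle beam got no return.
points = scan_to_points([1.0, float("inf"), 2.0], 0.0, math.pi / 2, max_range_m=10.0)
print(points)
```

A 3D LiDAR adds an elevation angle per beam, but the conversion follows the same polar-to-Cartesian idea.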

- Motion Sensors:

Motion sensors detect changes in the robot’s motion or the motion of objects in the environment. These sensors include accelerometers, gyroscopes, and inertial measurement units (IMUs), which provide information about the robot’s acceleration, angular velocity, and orientation. Motion sensors enable robots to stabilize their movements, detect collisions, and navigate through dynamic environments with agility and precision.

- Infrared Sensors:

Infrared sensors detect infrared radiation emitted by objects in the environment, providing information about temperature, heat distribution, and thermal signatures. These sensors are used for applications such as heat detection, thermal imaging, and object tracking in robotics. Infrared sensors enable robots to perceive and interact with objects based on their thermal properties, enhancing situational awareness and object recognition capabilities.

Functionalities of Vision Sensors:

- Object Detection and Recognition:

Vision sensors enable robots to detect and recognize objects in their surroundings, distinguishing between different shapes, colors, and textures. By analyzing visual data from cameras and depth sensors, robots can identify objects, classify them into categories, and localize them within the environment. Object detection and recognition are essential for tasks such as pick-and-place operations, sorting, and assembly in robotics applications.

- Obstacle Avoidance and Navigation:

Vision sensors facilitate obstacle avoidance and navigation in robotics applications, allowing robots to navigate through complex environments safely and efficiently. LiDAR sensors and depth cameras provide depth information for creating obstacle maps and planning collision-free trajectories. By integrating vision sensors with path planning algorithms, robots can autonomously navigate through cluttered spaces and avoid collisions with obstacles.

- Localization and Mapping:

Vision sensors play a crucial role in localization and mapping for autonomous robots and vehicles. Depth sensors and LiDAR sensors generate detailed maps of the environment, while camera sensors provide visual landmarks for localization. By fusing data from multiple sensors and performing simultaneous localization and mapping (SLAM), robots can determine their position and orientation relative to the surroundings, enabling autonomous navigation and exploration.

- Gesture and Pose Estimation:

Vision sensors enable robots to interpret human gestures and estimate the pose of objects in the environment. Camera sensors capture images of human gestures or object poses, which are then analyzed using computer vision algorithms to infer the intended actions or orientations. Gesture and pose estimation are used for human-robot interaction, object manipulation, and control in robotics applications.

- Quality Inspection and Monitoring:

Vision sensors are used for quality inspection and monitoring in manufacturing and industrial robotics applications. Camera sensors capture images of products or components, which are analyzed for defects, anomalies, or deviations from specifications. Vision-based inspection systems enable robots to perform automated quality control, ensuring product quality and consistency in manufacturing processes.
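One basic form of vision-based inspection compares each captured image against a known-good reference. The following is a minimal sketch, assuming aligned grayscale images stored as nested lists of intensities; the function names, the per-pixel tolerance, and the pass threshold are hypothetical.

```python
from typing import List

Image = List[List[int]]


def defect_ratio(reference: Image, sample: Image, tolerance: int = 10) -> float:
    """Fraction of pixels that differ from the reference by more than the tolerance."""
    if len(reference) != len(sample) or any(len(r) != len(s) for r, s in zip(reference, sample)):
        raise ValueError("reference and sample must have the same dimensions")
    total = sum(len(row) for row in reference)
    if total == 0:
        raise ValueError("images must not be empty")
    defects = sum(
        1
        for ref_row, smp_row in zip(reference, sample)
        for ref_px, smp_px in zip(ref_row, smp_row)
        if abs(ref_px - smp_px) > tolerance
    )
    return defects / total


def passes_inspection(reference: Image, sample: Image, max_defect_ratio: float = 0.01) -> bool:
    """Accept the part if the share of deviating pixels stays under the limit."""
    return defect_ratio(reference, sample) <= max_defect_ratio


reference = [[100, 100], [100, 100]]
good_part = [[102, 98], [101, 100]]
bad_part = [[102, 98], [30, 100]]
print(passes_inspection(reference, good_part), passes_inspection(reference, bad_part))
```

This only works when parts are presented in a fixed pose under stable lighting; production systems normally add image alignment and lighting normalization first.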

Significance of Vision Sensors in Robotics:

- Enhanced Perception and Understanding:

Vision sensors provide robots with enhanced perception and understanding of the environment, enabling them to interpret visual information and make informed decisions autonomously. By capturing images and videos of the surroundings, vision sensors enable robots to recognize objects, detect obstacles, and navigate through complex environments with precision and accuracy.

- Autonomous Operation and Navigation:

Vision sensors enable autonomous operation and navigation for robots, allowing them to navigate through dynamic environments without human intervention. By analyzing visual data and generating 3D maps of the surroundings, robots can plan collision-free trajectories, localize themselves within the environment, and adapt their behavior based on changing conditions. Vision-based navigation systems are essential for applications such as autonomous vehicles, drones, and mobile robots.

- Flexible and Adaptive Behavior:

Vision sensors enable robots to exhibit flexible and adaptive behavior in response to changes in the environment or task requirements. By analyzing visual data in real-time, robots can adjust their actions, trajectory, or manipulation strategy to accommodate variations in object position, shape, or orientation. Vision-based control systems allow robots to adapt to unforeseen circumstances and perform tasks with agility and efficiency.

- Human-Robot Interaction:

Vision sensors facilitate human-robot interaction by enabling robots to perceive and interpret human gestures, expressions, and intentions. By capturing images of human gestures or facial expressions, vision sensors enable robots to understand and respond to human commands, gestures, and emotions. Vision-based interaction systems enhance the user experience and promote seamless collaboration between humans and robots in various domains, including healthcare, education, and entertainment.

- Innovation and Advancement:

Ongoing research and development in vision sensor technologies drive innovation and advancement in the field of robotics, unlocking new possibilities for performance, functionality, and application. From advancements in sensor resolution and image processing algorithms to innovations in sensor fusion and artificial intelligence, vision sensors continue to evolve, enabling robots to push the boundaries of perception, interaction, and autonomy.

In conclusion, vision sensors stand as essential components in the realm of robotics, providing robots with perception, awareness, and interaction capabilities essential for autonomous operation and intelligent behavior. From cameras capturing visual data to LiDAR sensors generating 3D maps, vision sensors enable robots to see and understand the world around them with precision and accuracy. As robotics technology continues to advance and evolve, the significance of vision sensors as the eyes of robots remains steadfast, shaping the future of automation and human-machine interaction.

- Inertial Sensors:

Inertial sensors, such as accelerometers and gyroscopes, measure the acceleration and angular velocity of a robot’s body in three-dimensional space. These sensors provide information about the robot’s orientation, velocity, and changes in motion, facilitating stabilization, motion control, and navigation. Inertial sensors are essential for applications such as balancing robots, inertial navigation systems, and gesture recognition.

Inertial Sensors: Navigating the Path of Robotics

In robotics, where precision, agility, and adaptability are paramount, inertial sensors are fundamental components, providing crucial input for navigation, motion control, and stabilization. Serving as the inner ear and internal compass of robots, inertial sensors enable them to perceive changes in motion, orientation, and acceleration, facilitating autonomous operation and intelligent behavior. The sections below cover the main types of inertial sensors, their functionalities, and their role in modern robotics.

Types of Inertial Sensors:

- Accelerometers:

Accelerometers measure the acceleration of a robot’s body along up to three axes, providing information about changes in velocity and direction. These sensors are typically microelectromechanical systems (MEMS) devices in which a small proof mass suspended on flexible microstructures deflects in response to acceleration, with the deflection often sensed through a change in capacitance between plates. By measuring the deflection, accelerometers can determine the magnitude and direction of acceleration along different axes, enabling robots to detect changes in motion and velocity. Because an accelerometer at rest still registers gravity, it can also be used to sense tilt.

- Gyroscopes:

Gyroscopes measure the angular velocity or rate of rotation of a robot’s body around different axes, providing information about changes in orientation and angular motion. These sensors utilize principles such as the Coriolis effect or mechanical resonance to detect rotational movement. Gyroscopes can be mechanical, optical, or MEMS-based, with MEMS gyroscopes being the most common in modern robotics due to their smaller size, lower cost, and lower power consumption, although they are generally less precise than high-end mechanical or optical units.

- Inertial Measurement Units (IMUs):

Inertial Measurement Units (IMUs) combine accelerometers and gyroscopes into a single integrated sensor package, providing comprehensive information about a robot’s motion and orientation. IMUs typically contain three accelerometers and three gyroscopes arranged along orthogonal axes, sometimes along with additional components such as magnetometers or barometers for detecting magnetic fields or atmospheric pressure. An IMU directly measures acceleration and angular velocity; orientation, velocity, and position are estimated by fusing and integrating these measurements, and the position estimate in particular drifts unless it is corrected by other sensors.

Functionalities of Inertial Sensors:

- Motion Sensing and Analysis:

Inertial sensors enable robots to sense and analyze changes in motion, velocity, and acceleration, providing valuable information for motion control and navigation. Accelerometers detect linear acceleration along different axes, while gyroscopes measure angular velocity around different axes. By integrating data from accelerometers and gyroscopes, robots can analyze their motion profile, detect changes in velocity, and adjust their trajectory or velocity accordingly.

- Orientation Estimation and Stabilization:

Inertial sensors facilitate orientation estimation and stabilization for robots, enabling them to maintain a stable posture or orientation in dynamic environments. Gyroscopes provide continuous measurements of angular velocity, allowing robots to track changes in orientation in real time, but integrating those measurements accumulates drift. Accelerometers sense the direction of gravity, which gives a noisy but drift-free reference for tilt. By combining gyroscope data with accelerometer data, robots can estimate their current orientation relative to a reference frame and implement stabilization algorithms to maintain a desired orientation.
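One common way to combine the two sensors is a complementary filter, which trusts the gyroscope over short time scales and the accelerometer over long ones. The sketch below is one possible illustration, not the only fusion method; it assumes a single pitch axis, an axis convention in which a level, stationary sensor reads (0, 0, +g), and a hypothetical blending factor `alpha` of 0.98.

```python
import math


def accel_pitch_rad(ax: float, ay: float, az: float) -> float:
    """Pitch angle implied by the measured gravity direction (assumed axis convention)."""
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))


def complementary_filter(pitch_prev_rad: float, gyro_rate_rad_s: float,
                         accel_pitch: float, dt_s: float, alpha: float = 0.98) -> float:
    """Blend the integrated gyro rate (short term) with the accelerometer tilt (long term)."""
    gyro_pitch = pitch_prev_rad + gyro_rate_rad_s * dt_s
    return alpha * gyro_pitch + (1.0 - alpha) * accel_pitch


# Stationary, level robot whose gyro has a constant bias of 0.01 rad/s.
GYRO_BIAS = 0.01
DT = 0.01  # 100 Hz sampling
filtered_pitch = 0.0
gyro_only_pitch = 0.0
for _ in range(1000):  # 10 seconds
    gyro_only_pitch += GYRO_BIAS * DT
    filtered_pitch = complementary_filter(
        filtered_pitch, GYRO_BIAS, accel_pitch_rad(0.0, 0.0, 9.81), DT
    )

print(f"gyro only: {gyro_only_pitch:.4f} rad, filtered: {filtered_pitch:.4f} rad")
```

In this simulated case the gyro-only estimate keeps drifting while the filtered estimate settles at a small bounded error. Kalman filters are a frequent alternative when a noise model is available.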

- Dead Reckoning and Localization:

Inertial sensors enable dead reckoning and localization for robots, allowing them to estimate their position and trajectory based on inertial measurements. By integrating angular velocity once to obtain orientation and acceleration twice to obtain position, robots can calculate changes in position and orientation relative to a starting point. Because sensor bias and noise are integrated along with the signal, the position error grows over time. Dead reckoning is therefore usually used in combination with other localization techniques, such as GPS or visual odometry, to provide accurate position estimates for autonomous navigation in robotics applications.
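The integration step, and the way small errors grow, can be illustrated in one dimension. This is a minimal sketch, assuming straight-line motion, a fixed sample period, and simple Euler integration; the function name and the bias value are hypothetical.

```python
from typing import Iterable, Tuple


def dead_reckon_1d(accel_samples_m_s2: Iterable[float], dt_s: float,
                   v0_m_s: float = 0.0, x0_m: float = 0.0) -> Tuple[float, float]:
    """Integrate acceleration twice to get (position, velocity)."""
    velocity, position = v0_m_s, x0_m
    for accel in accel_samples_m_s2:
        velocity += accel * dt_s      # first integration: acceleration -> velocity
        position += velocity * dt_s   # second integration: velocity -> position
    return position, velocity


# A robot that is actually standing still, but whose accelerometer
# reports a constant bias of 0.05 m/s^2 for 10 seconds at 100 Hz.
biased_samples = [0.05] * 1000
position, velocity = dead_reckon_1d(biased_samples, dt_s=0.01)
print(f"apparent position: {position:.3f} m, apparent velocity: {velocity:.3f} m/s")
```

The apparent displacement of about 2.5 m after only 10 seconds grows with the square of elapsed time, which is why inertial dead reckoning is periodically corrected with an absolute reference.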

- Motion Gesture Recognition:

Inertial sensors enable motion gesture recognition for human-robot interaction and control. By analyzing patterns of acceleration and angular velocity, robots can detect specific motion gestures or commands made by users and interpret them as control inputs. Motion gesture recognition is used in applications such as gesture-based control interfaces, virtual reality systems, and wearable devices, allowing users to interact with robots using natural hand movements.

- Vibration Analysis and Monitoring:

Inertial sensors enable vibration analysis and monitoring for condition monitoring and predictive maintenance in robotics applications. By measuring vibrations in the robot’s body or mechanical components, accelerometers can detect abnormal vibration patterns indicative of mechanical faults or wear. Vibration analysis enables robots to identify potential issues early, perform predictive maintenance, and prevent unexpected downtime or failures in industrial or mobile robotic systems.
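A basic vibration check compares the RMS level of recent accelerometer samples against a baseline recorded on a healthy machine. The sketch below is only an illustration of that idea; the function names and the factor of 2.0 used as an alarm threshold are hypothetical, and real systems often analyze the frequency spectrum as well.

```python
import math
from typing import Sequence


def vibration_rms(samples: Sequence[float]) -> float:
    """RMS of the samples after removing their mean (for example, the gravity offset)."""
    if not samples:
        raise ValueError("at least one sample is required")
    mean = sum(samples) / len(samples)
    return math.sqrt(sum((s - mean) ** 2 for s in samples) / len(samples))


def is_abnormal(samples: Sequence[float], baseline_rms: float, factor: float = 2.0) -> bool:
    """Flag the window if its RMS exceeds the healthy baseline by the given factor."""
    return vibration_rms(samples) > factor * baseline_rms


healthy = [10.5, 9.5, 10.5, 9.5]   # small oscillation around a constant offset
worn = [12.0, 8.0, 12.0, 8.0]      # same offset, four times the amplitude
baseline = vibration_rms(healthy)
print(baseline, is_abnormal(healthy, baseline), is_abnormal(worn, baseline))
```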

Significance of Inertial Sensors in Robotics:

- Autonomous Navigation and Control:

Inertial sensors play a crucial role in autonomous navigation and control for robots, providing essential input for motion planning, trajectory generation, and stabilization. By sensing changes in motion and orientation, inertial sensors enable robots to navigate through dynamic environments, avoid obstacles, and maintain stable motion trajectories. Inertial sensors are essential components of autonomous systems such as drones, mobile robots, and unmanned vehicles, enabling them to operate independently in complex and unpredictable environments.

- Adaptive Motion Control and Agility:

Inertial sensors enable robots to exhibit adaptive motion control and agility, adjusting their behavior and trajectory in response to changes in the environment or task requirements. By analyzing inertial measurements in real-time, robots can detect disturbances, changes in terrain, or unexpected obstacles and adapt their motion strategy accordingly. Inertial sensors enable robots to perform dynamic maneuvers, stabilize their motion, and respond quickly to changing conditions, enhancing their agility and versatility in various robotics applications.

- Precise Positioning and Localization:

Inertial sensors support positioning and localization for robots, helping them track their position and orientation in three-dimensional space. By integrating inertial measurements over time, robots can estimate their trajectory and track their position relative to a reference frame, with good accuracy over short intervals. Because these estimates drift over longer periods, inertial sensors are used in conjunction with other localization techniques such as GPS, visual odometry, or environmental mapping to provide accurate and reliable position estimates for navigation in indoor or outdoor environments.

- Compact and Lightweight Design:

Inertial sensors offer a compact and lightweight design, making them suitable for integration into portable or wearable robotic systems. MEMS-based inertial sensors have a small form factor and low power consumption, allowing them to be embedded into drones, wearable devices, or mobile robots without adding significant weight or size. The compact and lightweight design of inertial sensors enables the development of agile, versatile, and mobile robotic platforms for various applications in fields such as healthcare, agriculture, or search and rescue.

- Continuous Monitoring and Predictive Maintenance:

Inertial sensors enable continuous monitoring and predictive maintenance for robotic systems, detecting changes in motion or mechanical condition indicative of potential faults or failures. By analyzing inertial measurements over time, robots can identify abnormal vibration patterns, excessive wear, or mechanical stress on components and alert operators to take preventive action. Continuous monitoring and predictive maintenance help prolong the lifespan of robotic systems, reduce downtime, and improve overall reliability and performance in industrial or mission-critical applications.

In conclusion, inertial sensors stand as essential components in the realm of robotics, providing crucial input for navigation, motion control, and stabilization. From sensing changes in motion and orientation to enabling autonomous navigation and adaptive motion control, inertial sensors play a vital role in enhancing the agility, autonomy, and reliability of robots in various applications and environments. As robotics technology continues to advance and evolve, the significance of inertial sensors as navigational aids and internal compasses remains steadfast, shaping the future of automation and intelligent robotics.